Announcing Garden: HPC-ready AI Powered by Globus Compute

October 21, 2025 | Ben Blaiszik

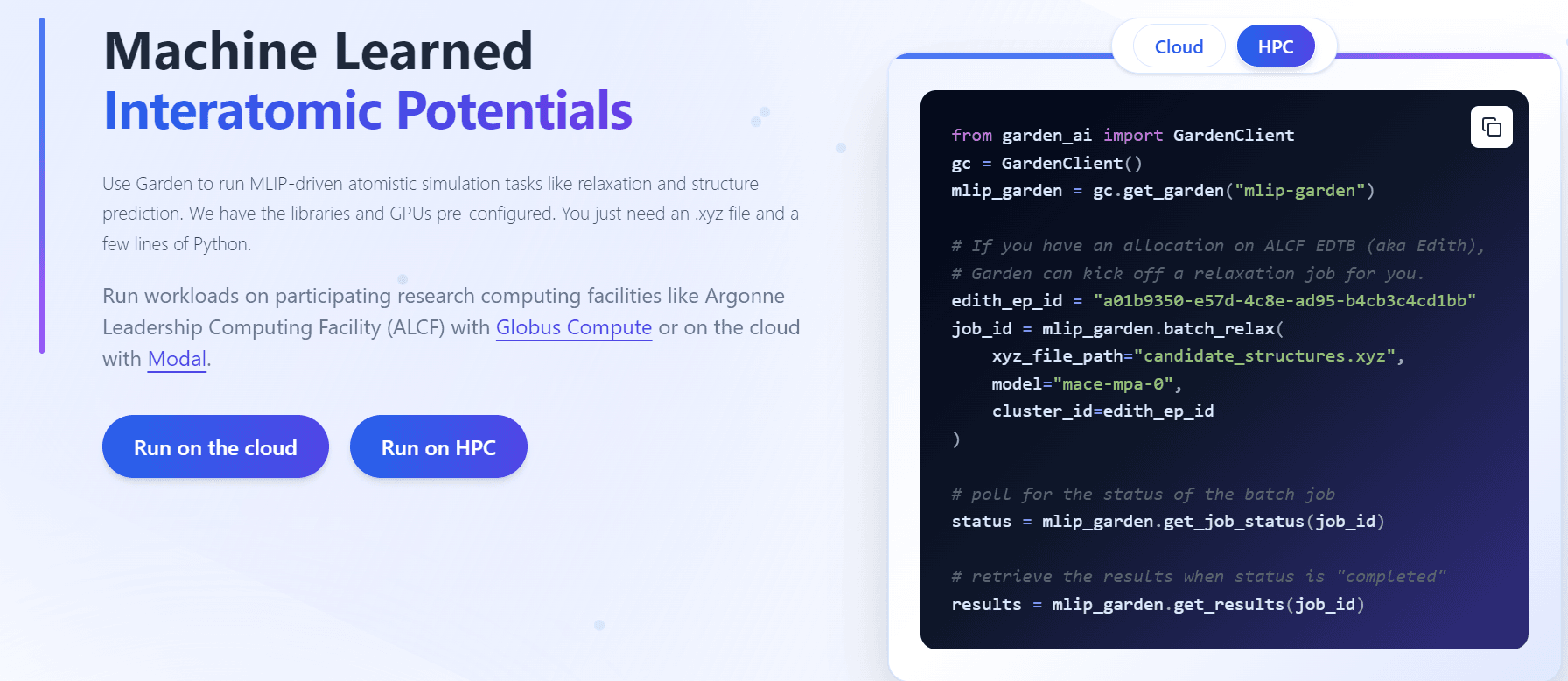

What if publishing and running the best machine learning models, including best-in-class machine-learned interatomic potentials (MLIPs), took seconds, not days? That’s a core idea behind Garden, a service that makes it simple for users to find and build upon models and to scale their machine learning tasks across cloud and HPC. Garden is a framework for publishing and running scientific AI models with clear metadata, benchmarks, and one-line execution without local setup (Figure 1). The HPC side of Garden is powered by Globus Compute, so if you already use Globus (or run on one of a growing list of supported DOE/NSF systems), you can invoke models where your allocations live, without the hassle of setting up containers, dealing with environment drift, or writing ad hoc job scripts.

What’s in our first release?

While Garden has already collected and published over 100 models in physics, materials science, chemistry, and more, we focus here on one specific use case: simple community access to MLIPs, a class of models of growing importance for materials science and chemistry applications.

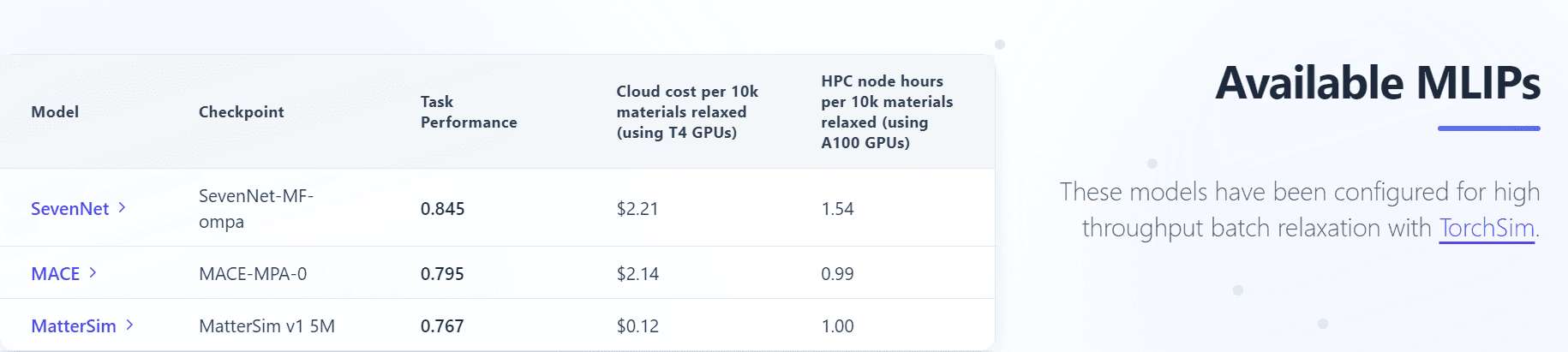

- Models available now: MACE, MatterSim, SevenNet, and more, packaged as callable functions with consistent inputs/outputs.

- Benchmarks: By making these models available through a uniform interface, we were able to directly compare task performance and cost (cloud + HPC) so you can pick the right model for the job (Figure 2).

- Instant trials: Researchers can spin up experiments quickly, testing for free in the cloud (via Modal) or running larger jobs on HPC via Globus Compute multi-user endpoints.

You can explore and try out the collection here: https://thegardens.ai/#/use-cases/mlips

Why this matters (especially for Globus users)

MLIPs are reshaping molecular dynamics and materials simulation, but the setup burden (handling different CUDA versions, managing dependencies, and other infrastructure issues) slows research significantly. Garden and Globus together remove that friction, allowing you to:

- Run where your data and allocations already are. Authenticate with Globus, invoke on a Globus Compute endpoint, and let Garden handle the rest. (Don’t have a Globus Compute endpoint on your system yet? Contact me at blaiszik@uchicago.edu for help setting one up!)

- Lower “time-to-first-result.” Go from idea to trajectories or property predictions in minutes, not days.
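For readers curious about the execution pattern underneath, here is a minimal sketch of fanning out a batch of model calls through an executor. It is not Garden's API; Garden handles this step for you. It relies on the fact that the Globus Compute Python SDK's `Executor` follows Python's standard `concurrent.futures` interface, so the same code can be exercised locally with a `ThreadPoolExecutor`. The function `predict_energy` is hypothetical: a toy Lennard-Jones pair energy standing in for an MLIP call, and the endpoint UUID in the comment is a placeholder.

```python
from concurrent.futures import Executor, ThreadPoolExecutor


def predict_energy(positions):
    """Hypothetical stand-in for a packaged MLIP call.

    Returns a toy pairwise Lennard-Jones energy (epsilon = sigma = 1) so the
    sketch runs anywhere; a real model would return MLIP predictions.
    Assumes all atoms are at distinct positions.
    """
    energy = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            # Squared distance between atoms i and j.
            r2 = sum((a - b) ** 2 for a, b in zip(positions[i], positions[j]))
            inv6 = 1.0 / r2 ** 3  # (sigma / r)^6
            energy += 4.0 * (inv6 * inv6 - inv6)
    return energy


def run_batch(executor: Executor, structures):
    # Submit every structure first, then collect results in submission order.
    futures = [executor.submit(predict_energy, s) for s in structures]
    return [f.result() for f in futures]


if __name__ == "__main__":
    structures = [
        [(0.0, 0.0, 0.0), (2 ** (1 / 6), 0.0, 0.0)],  # dimer at the LJ minimum
        [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0)],
    ]
    # Local stand-in. Assumption: on HPC this would instead be
    # globus_compute_sdk.Executor(endpoint_id="<your-endpoint-uuid>").
    with ThreadPoolExecutor() as ex:
        print(run_batch(ex, structures))
```

In this sketch, swapping the local pool for a Globus Compute executor is the only change needed to send the same batch to an HPC endpoint, assuming the SDK is installed and you are authenticated with Globus.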

Under the hood: improved performance and open access

Building this Garden let us focus on high performance while giving the community immediate access to these models through free cloud credits, or the option to run larger campaigns on HPC.

- TorchSim acceleration. Models are served through TorchSim for significant throughput gains on modern GPUs (https://github.com/Radical-AI/torch-sim).

- Hybrid execution. Cloud runs are backed by Modal credits so you can try models without standing up infrastructure; HPC runs flow through Globus Compute on leadership-class systems.

- Open, evolving “gardens.” Each model ships with metadata, examples, and benchmark hooks; leaderboards and automated benchmarks are on the way.

How can you get involved?

We are looking for initial partners. Please reach out to blaiszik@uchicago.edu with scientific questions, and to the Globus team about getting a Globus Compute endpoint deployed on your local cluster.

Acknowledgements

This work was supported by the National Science Foundation: Award #2209892. This research used resources of the Argonne Leadership Computing Facility, a U.S. Department of Energy (DOE) Office of Science user facility at Argonne National Laboratory, and is based on research supported by the U.S. DOE Office of Science, Advanced Scientific Computing Research Program, under Contract No. DE-AC02-06CH11357.

The Garden team includes partners from UChicago, MIT, University of Wisconsin-Madison, and Argonne National Lab: Owen Price-Skelly, Hayden Holbrook, Will Engler, Rebecca Willett, Dane Morgan, Eliu Huerta, Rafa Gomez Bombarelli, Tatem Rios, Nofit Segal, Ben Blaiszik, and Ian Foster.